NanoPGD: Minimal Implementation of PGD 🐼➡️🐒

PGD is the perfect [adversarial] example of neural networks’ vulnerability. I implemented a compact version of this method that can be applied to arbitrary images and against state-of-the-art (SoTA) classifiers.

The code in this post is also available at https://github.com/matanbt/demo-pgd


Some history

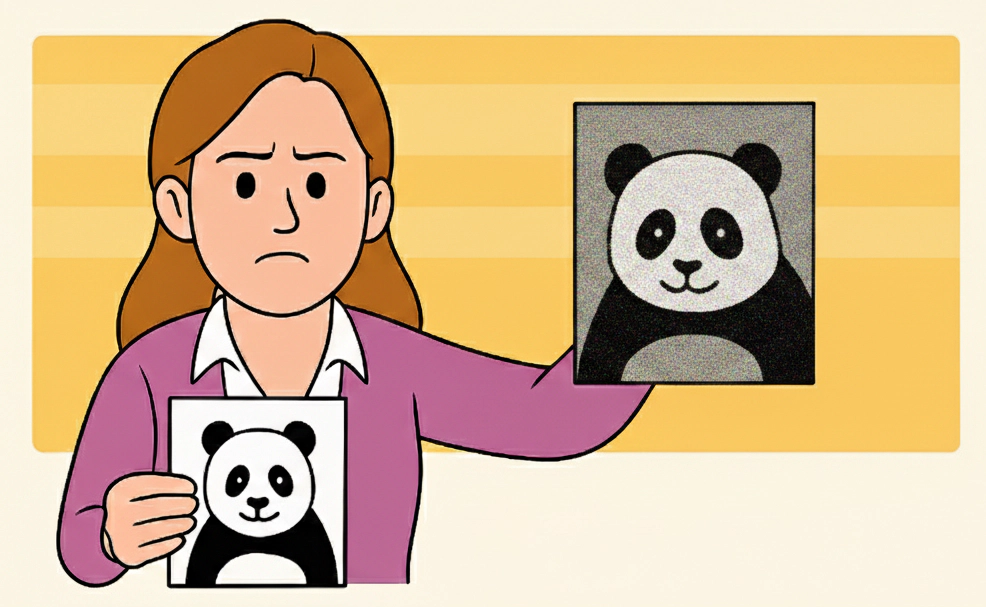

Following the ImageNet moment and the growing interest in deep neural network (NN) models for vision tasks, it was also shown that these models have several odd failure modes. One of them has been around for over a decade: even today, state-of-the-art NN vision models are susceptible to adversarial examples. The concept of adversarial examples for vision was introduced in 2013 (Szegedy et al. ’13 and Biggio et al. ’13). Such an attack slightly perturbs a given image, in a way imperceptible to us humans, yet significantly alters the model’s decision (i.e., the model misclassifies the image).

Projected Gradient Descent (PGD; Madry et al. ’17) is a method for crafting such adversarial examples for vision models (roughly, an iterative variant of Szegedy et al.’s initial approach). That is, given an image and access to a model, it crafts a small, bounded perturbation that, when added to the image, leads the model to misclassify it.
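In the targeted, L∞-bounded form used later in this post, PGD starts from the clean image x and repeatedly takes a signed gradient step that decreases the cross-entropy loss of the target class y*, then projects the result back onto the valid images within distance ε of x. The notation below is my own; Madry et al. state the untargeted variant, which instead ascends the loss of the true label.

```latex
\begin{aligned}
x^{(0)} &= x \\
x^{(t+1)} &= \operatorname{clip}_{[0,1]}\Big(x + \operatorname{clip}_{[-\epsilon,\epsilon]}\big(x^{(t)} - \alpha \operatorname{sign}\big(\nabla_{x^{(t)}} \mathcal{L}(f(x^{(t)}), y^{\star})\big) - x\big)\Big)
\end{aligned}
```

Here f is the classifier, α the step size and ε the per-pixel (per-channel) budget. Together, the two clips are the projection onto the set {x' : ‖x' − x‖∞ ≤ ε, x' ∈ [0,1]^d}, so the final image differs from x by at most ε in every pixel value.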

PGD is still relevant!

One might think that a decade of research on adversarial examples would suffice for the community to mitigate this vulnerability of NNs. Yet not only are models still susceptible to it, but the issue has also been demonstrated in other modalities, including text classification and text generation.

For example, it was shown that text classifiers can be fooled by strategically adding or modifying a few tokens (analogous to adding slight noise to an image’s pixels). In a more recent example, one can bypass the guardrails of large language models (LLMs) by optimizing a short sequence that, when appended to a harmful instruction, leads the model to an off-policy response.

All these adversarial attacks target a core weakness of NNs: a slight change in the input can have a large effect on the output, deviating from the abstract training goal. Of course, the notions of “slight change”, “large effect”, and “training goal” vary across modalities, models, and tasks, and are often hard to define accurately. For image classifiers, the abstract training goal is to “perfectly” categorize images, and a large deviation from that goal would be making the model misclassify an image with high confidence. For safety-aligned LLMs, one such goal is refusing to produce “harmful” responses, and a deviation from it would be leading the model to output a “harmful” response (e.g., instructions for building a bomb).

I have found that the existence of adversarial examples against state-of-the-art models often catches practitioners by surprise. Honestly, that was my own first reaction when I heard a talk on these attacks for the first time, in 2022 (given by the person who is now my PhD supervisor); afterwards, I approached the lecturer and asked whether this issue was still relevant (and the rest is history...). I have some thoughts on this misconception and its causes, but I’ll leave them for another post.

The potency and relevance of the attack, as well as this misconception about ML models’ susceptibility, led me to write this minimal implementation, which demonstrates the attack on a model (image classifier) and an image of your choice.

What we will implement

PGD is a white-box (i.e., it requires access to the model’s gradients), inference-time (i.e., it operates on, and modifies only, the model’s input) attack. As such, the attack is given the model, an image, and a target class; it then returns a slightly modified image (which to us humans looks like the original) that the model misclassifies as the target class.

Some technical details:

- We load an image classifier using timm, a popular framework for vision models.
- We focus on models fine-tuned on the ImageNet-1K dataset, since we want to target a classifier, and these models come with an ImageNet classification head.
- Specifically, in the example below we target the top-ranked model on timm’s leaderboard (as of Feb 2025).

Let’s code.

NanoPGD

In what follows, we run PGD to imperceptibly perturb an image of a dog (Masha) so that it is classified as a Persian cat. The following code is self-contained.

Setup

We start with some setup: imports, fetching some metadata, and defining the target model and classes.

```python
!git clone https://github.com/matanbt/pgd-demo.git  # notebook shell command; drop the "!" in a terminal

import os
os.chdir("pgd-demo")

from functools import partial
from typing import List

import torch
import torchvision
from PIL import Image
import timm
from tqdm import trange

# A GPU is strongly recommended; the attack below is slow on CPU for large models
device = 'cuda' if torch.cuda.is_available() else 'cpu'

## Model choice:
MODEL_NAME = 'eva02_large_patch14_448.mim_m38m_ft_in22k_in1k'
# MODEL_NAME = 'resnet50.a1_in1k'  # can also try on ResNet

## Target class choice:
DOG_IDX = 166  # Walker hound, Walker foxhound
CAT_IDX = 283  # Persian cat
```

We also define a few helper functions for processing the input image and the class labels.

```python
import ast

# Load ImageNet-1K class names (the file holds a Python dict literal {index: label})
with open("data/imagenet1000_clsidx_to_labels.txt") as f:
    idx2label = ast.literal_eval(f.read())  # safer than eval() for a plain literal


def get_label_text(idx: int) -> str:
    """
    Convert an ImageNet class (=label) index to its class string
    """
    return idx2label[idx]


def get_image(_img_tensor: torch.Tensor):
    """
    Convert an image tensor (values in [0, 1]) to a PIL image object
    """
    if _img_tensor.shape[0] == 1:  # drop the batch dimension, if present
        _img_tensor = _img_tensor[0]
    return torchvision.transforms.ToPILImage()(_img_tensor.detach().cpu())


def _get_logits(_model, _norm_func, _img_tensor, normalize_before=True):
    """
    Run the model; apply normalization to the input first, if needed (`normalize_before`)
    """
    if normalize_before:
        _img_tensor = _norm_func(_img_tensor)
    return _model(_img_tensor)
```

Load image and model

```python
# Load attacked image:
img = Image.open('data/masha.png').convert('RGB')

# Load model:
model = timm.create_model(MODEL_NAME, pretrained=True)
model = model.to(device)
model.requires_grad_(False)  # we only need gradients w.r.t. the input, not the weights
model = model.eval()

# Prepare the model's transformations, splitting off the final normalization step
# so that the attack operates on raw [0, 1] pixel values
data_config = timm.data.resolve_model_data_config(model)
model_transforms = timm.data.create_transform(**data_config, is_training=False)
assert isinstance(model_transforms.transforms[-1], torchvision.transforms.Normalize)
init_model_transforms = torchvision.transforms.Compose(model_transforms.transforms[:-1])
norm_func = model_transforms.transforms[-1]
get_logits = partial(_get_logits, model, norm_func)

# Transform the image to a tensor and show it
img_tensor = init_model_transforms(img).unsqueeze(0).to(device)
get_image(img_tensor)
```
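Why split the normalization off? The attack’s budget eps, and the [0, 1] clamp, are defined in raw pixel space, while the model consumes normalized inputs. Normalization is a per-channel affine map, (x − mean) / std, so a pixel-space change of eps becomes eps / std after normalization. The dependency-free sketch below checks this arithmetic. The MEAN/STD values are an assumption (the common ImageNet defaults), used only for illustration; a given timm model’s actual values come from data_config and may differ.

```python
# Assumed per-channel statistics (the common ImageNet defaults), for illustration only;
# a real timm model's values are in data_config["mean"] and data_config["std"].
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def normalize(pixel: float, channel: int) -> float:
    """Per-channel affine normalization, the same map torchvision's Normalize applies."""
    return (pixel - MEAN[channel]) / STD[channel]


eps = 5 / 255  # pixel-space budget, matching the pgd() default
x = 0.5        # an arbitrary pixel value in [0, 1]

# How far a pixel-space step of eps moves the normalized value, per channel
shifts = [normalize(x + eps, c) - normalize(x, c) for c in range(3)]
for c, shift in enumerate(shifts):
    print(f"channel {c}: pixel shift {eps:.4f} -> normalized shift {shift:.4f}")
```

Since the shift depends on each channel’s std, a budget expressed after normalization would be channel-dependent and harder to interpret; keeping the attack in pixel space also keeps the [0, 1] clamp meaningful.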

Now that the model and the image are loaded, let’s run an initial classification as a sanity check:

```python
def print_classification(logits: torch.Tensor):
    """
    Print the top-5 classes, plus the ranks of the dog and cat classes
    """
    logits = logits.detach().cpu()
    probabilities = (logits.softmax(dim=-1) * 100)[0]
    top_probabilities, top_class_indices = torch.sort(probabilities, descending=True)
    for rank, (idx, prob) in enumerate(zip(top_class_indices, top_probabilities)):
        if rank < 5 or idx.item() in (CAT_IDX, DOG_IDX):
            print(f">> #{rank+1} ({prob.item():.2f}%):", get_label_text(idx.item()))


# Run classification (before attack):
logits = get_logits(img_tensor)
print_classification(logits)
```

```
>> #1 (28.32%): Walker hound, Walker foxhound
>> #2 (12.74%): English foxhound
>> #3 (3.51%): beagle
>> #4 (2.85%): basenji
>> #5 (1.28%): wire-haired fox terrier
>> #894 (0.02%): Persian cat
```

Great, the model agrees with us that Masha is a dog.

Attack Algorithm

Now for the interesting part: implementing PGD. The pgd function accepts the images and the target labels, and invokes the model iteratively via get_logits. Note that we need “white-box” access to the model, since we perform a backward pass through get_logits to obtain the gradient of the adversarial loss w.r.t. the image.
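For a single image, the gradient this backward pass computes can be written out with the chain rule. With logits z = f(x) and the targeted cross-entropy loss for target class y*, a short derivation in my own notation:

```latex
\begin{aligned}
\mathcal{L}(x) &= -\log \frac{e^{z_{y^{\star}}}}{\sum_k e^{z_k}} = -z_{y^{\star}} + \log \sum_k e^{z_k}, \qquad z = f(x) \\
\frac{\partial \mathcal{L}}{\partial z_k} &= \operatorname{softmax}(z)_k - \mathbb{1}[k = y^{\star}] \\
\nabla_x \mathcal{L} &= J_f(x)^{\top} \big(\operatorname{softmax}(f(x)) - e_{y^{\star}}\big)
\end{aligned}
```

The Jacobian J_f of the whole network (including the normalization) w.r.t. the input pixels is what requires white-box access. With a batch, torch.nn.CrossEntropyLoss averages over images by default, which only rescales each image’s gradient by 1/n_batch, a positive factor that grad.sign() discards.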

```python
def pgd(
    images: torch.Tensor,  # (n_batch, n_channel, height, width); in range [0,1]
    labels: List[int],     # (n_batch,); target class per image
    # attack parameters:
    eps: float = 5 / 255,    # maximum perturbation (L-inf)
    alpha: float = 1 / 255,  # step size
    steps: int = 100,        # number of steps
):
    images = images.clone().detach().to(device)
    target_labels = torch.tensor(labels, device=device)  # (n_batch,)
    adv_images = images.clone().detach()
    loss = torch.nn.CrossEntropyLoss()

    # [OPTIONAL] Starting at a uniformly random point
    # adv_images = adv_images + torch.empty_like(adv_images).uniform_(
    #     -eps, eps
    # )
    adv_images = torch.clamp(adv_images, min=0, max=1).detach()

    for _ in trange(steps):
        adv_images.requires_grad = True
        logits = get_logits(adv_images)

        # Calculate loss
        cost = loss(logits, target_labels)  # targeted attack; descend on it

        # Update adversarial images, while ensuring they remain valid images
        grad = torch.autograd.grad(
            cost, adv_images, retain_graph=False, create_graph=False
        )[0]
        adv_images = adv_images.detach() - alpha * grad.sign()
        delta = torch.clamp(adv_images - images, min=-eps, max=eps)  # project onto the eps-ball
        adv_images = torch.clamp(images + delta, min=0, max=1).detach()  # keep pixels in [0, 1]

    return adv_images
```
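To see the update and projection steps of pgd in isolation, here is a dependency-free sketch that runs the same targeted loop on a hypothetical toy model: a 2-class linear softmax classifier over a 100-value “image”, with the gradient derived by hand instead of via autograd. The model, its weights, and the names toy_logits, toy_grad and toy_pgd are illustrative assumptions, not part of the original notebook.

```python
import math


def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def toy_logits(W, b, x):
    """Toy linear classifier: one logit per class, z_k = W[k] . x + b[k]."""
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]


def toy_grad(W, b, x, target):
    """Gradient w.r.t. x of the targeted loss -log softmax(z)[target].

    d(loss)/dz_k = p_k - [k == target]; for a linear model the chain rule
    then gives sum_k (p_k - [k == target]) * W[k].
    """
    p = softmax(toy_logits(W, b, x))
    coeffs = [pk - (1.0 if k == target else 0.0) for k, pk in enumerate(p)]
    return [sum(c * row[i] for c, row in zip(coeffs, W)) for i in range(len(x))]


def sign(v):
    """Sign of a float as -1, 0 or 1 (the scalar analogue of torch.sign)."""
    return (v > 0) - (v < 0)


def toy_pgd(W, b, x, target, eps=5 / 255, alpha=1 / 255, steps=100):
    """Targeted L-inf PGD on the toy model, mirroring the three steps of pgd()."""
    adv = list(x)
    for _ in range(steps):
        g = toy_grad(W, b, adv, target)
        # 1) signed gradient step that descends the targeted loss
        adv = [a - alpha * sign(gi) for a, gi in zip(adv, g)]
        # 2) project the perturbation back into [-eps, eps]
        adv = [xi + min(max(a - xi, -eps), eps) for a, xi in zip(adv, x)]
        # 3) clamp to the valid pixel range [0, 1]
        adv = [min(max(a, 0.0), 1.0) for a in adv]
    return adv


# Hypothetical 2-class toy model: on a flat gray "image", class 0 ("dog") wins
# through its bias, while class 1 ("cat") responds to an alternating pixel pattern.
dim = 100
W = [[0.0] * dim, [0.5 if i % 2 == 0 else -0.5 for i in range(dim)]]
b = [0.5, 0.0]
x = [0.5] * dim

before = toy_logits(W, b, x)
adv = toy_pgd(W, b, x, target=1)
after = toy_logits(W, b, adv)
print("logits before:", [round(v, 3) for v in before])
print("logits after: ", [round(v, 3) for v in after])
print("max |perturbation|:", max(abs(a - xi) for a, xi in zip(adv, x)))
```

Each step nudges every pixel by alpha along the sign of class 1’s weights; after about five steps the projection holds every pixel at ±eps from x, which is the largest gain a linear score can get under an L∞ budget, and here that is enough to flip the prediction.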

Run Attack

Finally, let’s run the attack:

```python
# Run the attack
adv_img_tensor = pgd(img_tensor, [CAT_IDX])
get_image(adv_img_tensor)
```

```
100%|██████████| 100/100 [00:54<00:00, 1.84it/s]
```

As we can see, the added noise is indeed imperceptible. Now let’s check the classification:

```python
# Run classification (after attack):
logits = get_logits(adv_img_tensor)
print_classification(logits)
```

```
>> #1 (97.35%): Persian cat
>> #2 (0.06%): Angora, Angora rabbit
>> #3 (0.06%): tabby, tabby cat
>> #4 (0.04%): lynx, catamount
>> #5 (0.03%): chow, chow chow
>> #950 (0.00%): Walker hound, Walker foxhound
```

Not only is Masha now a cat, but the model is also highly confident about it.

The full notebook and code are available at https://github.com/matanbt/demo-pgd